-This week I installed Ruby on Rails for the grid portal's interface and also configured it to work together with Apache.

-I also ran some tests on a customized USB memory stick, with the goal of building an Ubuntu distribution that includes everything needed.

http://es.wikibooks.org/wiki/Personalizar_distribuci%C3%B3n_de_Ubuntu_Live_CD

http://bloggeandolo.blogspot.mx/2010/01/crea-tu-version-personalizada-de-ubuntu.html

Thursday, April 12, 2012

CUDA Programming with Mathematica

Mathematica is a sophisticated development environment that combines a flexible programming language with a wide range of symbolic and numeric computational capabilities, high-quality visualization, built-in application packages, and a range of immediate deployment options. With access to thousands of datasets and the ability to load external dynamic libraries and automatically generate C code, Mathematica is the most intuitive build-to-deploy environment on the market.

Mathematica’s CUDALink: Integrated GPU Programming

Mathematica provides GPU programming support via the built-in CUDALink package, which gives you GPU-accelerated linear algebra, discrete Fourier transform, and image processing algorithms. You can also write your own CUDALink modules with minimal effort. The CUDALink package is included with Mathematica at no additional cost.
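CUDALink's GPU Fourier routines compute the discrete Fourier transform. To show what that transform is (not how CUDALink computes it), here is a minimal CPU sketch in Python of the textbook O(N²) definition; the function name `dft` is hypothetical, and a GPU or FFT implementation produces the same values in O(N log N).

```python
import cmath


def dft(x):
    """Naive O(N^2) discrete Fourier transform of a sequence x.

    X[k] = sum over n of x[n] * exp(-2*pi*i*k*n / N), for k = 0..N-1.
    """
    size = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / size) for n in range(size))
        for k in range(size)
    ]


if __name__ == "__main__":
    # A constant signal puts all of its energy in the k = 0 bin.
    print([round(abs(v), 6) for v in dft([1, 1, 1, 1])])  # [4.0, 0.0, 0.0, 0.0]
```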


- Mathematica GPU Computing Guide
- Mathematica CUDALink Tutorial
- Mathematica OpenCLLink Tutorial
- CUDA Programming within Mathematica (Wolfram whitepaper)

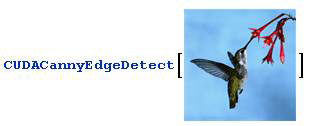

Example: Performing Canny edge detection
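Canny edge detection starts from image gradients. A minimal CPU sketch in Python of that first stage only, assuming a grayscale image stored as a list of lists: Sobel gradient magnitude followed by a single fixed threshold. Full Canny also applies Gaussian smoothing, non-maximum suppression, and hysteresis thresholding with two thresholds, and this is not CUDALink's implementation; the function names are hypothetical.

```python
import math


def sobel_magnitude(img):
    """Gradient magnitude of a 2D grayscale image (list of lists) using 3x3 Sobel kernels.

    Border pixels are left at 0 for simplicity.
    """
    gx_k = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal derivative
    gy_k = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical derivative
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            gx = sum(gx_k[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            gy = sum(gy_k[i][j] * img[r + i - 1][c + j - 1] for i in range(3) for j in range(3))
            out[r][c] = math.hypot(gx, gy)
    return out


def edge_mask(img, threshold):
    """Mark pixels whose gradient magnitude exceeds `threshold` (one fixed threshold, no hysteresis)."""
    mag = sobel_magnitude(img)
    return [[1 if v > threshold else 0 for v in row] for row in mag]


if __name__ == "__main__":
    # A vertical step edge: dark on the left, bright on the right.
    img = [[0, 0, 0, 10, 10, 10] for _ in range(5)]
    for row in edge_mask(img, 20):
        print(row)
```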

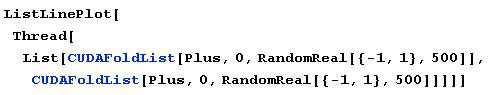

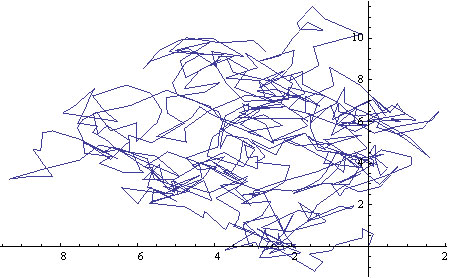

Example: Simulating a random walk
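For reference, a minimal CPU sketch in Python of a single 2D lattice random walk, assuming unit steps north, south, east, or west with equal probability. The GPU example in the figure may use a different step distribution or many walkers at once; `random_walk_2d` is a hypothetical name.

```python
import random


def random_walk_2d(steps, seed=None):
    """Simulate a 2D lattice random walk: each step moves one unit N, S, E or W
    with equal probability. Returns the visited (x, y) positions, starting at the origin."""
    rng = random.Random(seed)  # seeded generator for reproducible runs
    moves = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    x, y = 0, 0
    path = [(x, y)]
    for _ in range(steps):
        dx, dy = rng.choice(moves)
        x, y = x + dx, y + dy
        path.append((x, y))
    return path


if __name__ == "__main__":
    path = random_walk_2d(1000, seed=42)
    print("final position:", path[-1])
```

Using a private `random.Random(seed)` instance keeps runs reproducible without touching the global generator.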

The GPU computing capabilities in Mathematica were developed on Tesla and Quadro GPU computing products, and using Mathematica's CUDALink requires a recent CUDA-capable NVIDIA GPU. Tesla and Quadro GPU computing products are designed to deliver the highest computational performance with the most reliable numerical accuracy, and are available and supported by the world’s leading professional system manufacturers.


NVIDIA Tesla and Quadro products are available from all major professional workstation OEMs. Only Tesla GPU computing products are designed and qualified for compute cluster deployment.

BUY OPTIMIZED TESLA SYSTEMS

We partner with our system vendors to provide optimal solutions that accelerate your workload. Buy now and enjoy all the benefits of GPU-acceleration on Mathematica.


MPICH-G2

MPICH-G2 is a Grid-enabled implementation of the popular MPI (Message Passing Interface) framework. MPI is especially useful for problems that can be broken up into several processes running in parallel, with information exchanged between the processes as needed. The MPI programming environment handles the details of starting and shutting down processes, coordinating execution (supporting barriers and other IPC metaphors), and passing data between the processes.
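To make the vocabulary (processes, barriers, passing data) concrete, here is a minimal Python sketch that emulates MPI ranks with threads and queues, assuming a simple sum reduction to rank 0. It is only an analogy: it does not use MPI or MPICH-G2, and real MPI code would call `MPI_Send`, `MPI_Recv`, and `MPI_Barrier` (or just `MPI_Reduce`) from C or Fortran. `run_ranks` is a hypothetical name.

```python
import queue
import threading


def run_ranks(values):
    """Emulate MPI-style ranks with threads: every rank except 0 sends its value
    to rank 0, all ranks meet at a barrier, then rank 0 sums what it received."""
    size = len(values)
    inboxes = [queue.Queue() for _ in range(size)]  # one mailbox per rank
    barrier = threading.Barrier(size)
    result = {}

    def rank_main(rank):
        if rank != 0:
            inboxes[0].put((rank, values[rank]))  # analogous to a send to rank 0
        barrier.wait()  # analogous to MPI_Barrier: nobody proceeds until all arrive
        if rank == 0:
            total = values[0]
            for _ in range(size - 1):
                _src, v = inboxes[0].get()  # analogous to a receive from any source
                total += v
            result["sum"] = total

    threads = [threading.Thread(target=rank_main, args=(r,)) for r in range(size)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return result["sum"]


if __name__ == "__main__":
    print(run_ranks([1, 2, 3, 4]))  # 10
```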

MPICH-G2 uses Grid capabilities for starting processes on remote systems, for staging executables and data to remote systems, and for security. MPICH-G2 also selects the appropriate communication method (high-performance local interconnect, Internet protocol, etc.) depending on whether messages are being passed between local or remote systems.

Should I use MPICH-G2?

One important class of problems consists of those that are distributed by nature, that is, problems whose solutions are inherently distributed. An example is remote visualization, in which computationally intensive work producing visualization output is performed at one location (perhaps as an MPI application running on a massively parallel processor, or MPP) and the images are displayed on a remote high-end device (e.g., an IDesk or CAVE). For such problems, MPICH-G2 allows you to use MPI as your programming model.

A second class of problems consists of those that are distributed by design: you have access to multiple computers, perhaps at multiple sites connected across a WAN, and you wish to couple these computers into a computational grid, or simply a grid. Here MPICH-G2 can run your application using vendor-supplied implementations of MPI (where available) for intramachine communication and TCP for intermachine communication.
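The split between vendor MPI inside a machine and TCP between machines can be sketched as a per-message decision. This is a hypothetical Python illustration of the idea, not MPICH-G2's code: the `Process` type, the machine names, and the returned labels are all assumptions, and "where available" is modeled as a set of machines that have a vendor MPI.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Process:
    rank: int
    machine: str  # hypothetical: name of the machine (e.g. an MPP) hosting this rank


def choose_channel(src, dst, machines_with_vendor_mpi):
    """Pick a transport for a message from src to dst.

    Same machine and a vendor MPI is available there -> use the vendor MPI.
    Otherwise (different machines, or no vendor MPI) -> fall back to TCP.
    The returned labels are illustrative strings, not MPICH-G2 identifiers.
    """
    if src.machine == dst.machine and src.machine in machines_with_vendor_mpi:
        return "vendor-mpi"
    return "tcp"


if __name__ == "__main__":
    a, b, c = Process(0, "mpp-a"), Process(1, "mpp-a"), Process(2, "mpp-b")
    print(choose_channel(a, b, {"mpp-a"}))  # vendor-mpi
    print(choose_channel(a, c, {"mpp-a"}))  # tcp
```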

In one scenario illustrating this second class of problems, you have a cluster of workstations. Here the Globus services made available through MPICH-G2 provide an environment in which you can conveniently launch your MPI application. An example of this scenario is the Grid Application Development Software (GrADS) Project.

In another scenario, you have an MPI application that runs on a single MPP but has problem sizes too large for any single machine you have access to. In this situation a wide-area implementation of MPI like MPICH-G2 may help by enabling you to couple multiple MPPs in a single execution. Making efficient use of the additional CPUs distributed across a LAN and/or WAN typically requires modifying the application to cope with the relatively poor latency and bandwidth of intermachine communication. Two example applications are Cactus (winner of the Gordon Bell Award at SuperComputing 2001; see MPI-Related Papers) and Overflow(D2) from the Information Power Grid (IPG).
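Why intermachine latency forces application changes can be seen with the common latency-bandwidth model, T = latency + size / bandwidth. The Python sketch below compares sending many small messages against one aggregated message; the LAN and WAN parameters are hypothetical numbers chosen only for illustration, not measurements of any real grid.

```python
def transfer_time(nbytes, latency_s, bandwidth_bytes_per_s):
    """Simple latency-bandwidth model: T = latency + size / bandwidth (seconds)."""
    return latency_s + nbytes / bandwidth_bytes_per_s


def batched_vs_unbatched(n_messages, msg_bytes, latency_s, bandwidth):
    """Return (time for n separate messages, time for one aggregated message)."""
    separate = n_messages * transfer_time(msg_bytes, latency_s, bandwidth)
    combined = transfer_time(n_messages * msg_bytes, latency_s, bandwidth)
    return separate, combined


if __name__ == "__main__":
    # Hypothetical link parameters, for illustration only.
    links = {
        "LAN": (50e-6, 1e9 / 8),    # 50 microseconds, 1 Gbit/s
        "WAN": (50e-3, 100e6 / 8),  # 50 milliseconds, 100 Mbit/s
    }
    for name, (latency, bandwidth) in links.items():
        sep, comb = batched_vs_unbatched(1000, 1024, latency, bandwidth)
        print(f"{name}: 1000 separate messages {sep:.3f} s, one batched message {comb:.3f} s")
```

With these assumed WAN numbers, 1000 separate 1 KiB messages take about 50 s, almost all of it latency, while one aggregated message takes about 0.13 s; message aggregation is one typical adaptation of this kind.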

| MPICH-G2 | |
|---|---|
| Developed by | Northern Illinois University and Argonne National Laboratory |
| Availability | Download from the MPICH website |
| Mailing list | mpich-g@globus.org (you must be subscribed before posting to the list) |
